Classifier ensembles

Ensemble learning

Ensemble learning accomplishes a learning task by building and combining multiple learners. It is sometimes also called a multi-classifier system or committee-based learning.

Figure 1 shows the general structure of ensemble learning: first generate a set of "individual learners", then combine them with some strategy. Individual learners are usually produced from the training data by an existing learning algorithm, such as the C4.5 decision tree algorithm or the BP neural network algorithm. When an ensemble contains only individual learners of the same type, for example a "decision tree ensemble" made entirely of decision trees or a "neural network ensemble" made entirely of neural networks, the ensemble is "homogeneous". The individual learners in a homogeneous ensemble are also called "base learners", and the corresponding learning algorithm is called the "base learning algorithm". An ensemble can also contain individual learners of different types, for example both decision trees and neural networks; such an ensemble is called "heterogeneous". The individual learners in a heterogeneous ensemble are produced by different learning algorithms, so there is no single base learning algorithm; they are often called "component learners" or simply individual learners.

By combining multiple learners, ensemble learning can often achieve significantly better generalization performance than a single learner. This is especially pronounced for "weak learners", that is, learners whose generalization performance is only slightly better than random guessing. Consequently, much of the theoretical work on ensemble learning targets weak learners, and base learners are sometimes called weak learners directly. Note, however, that although in theory an ensemble of weak learners is enough to achieve good performance, in practice people often prefer relatively strong learners, for example because they want to use fewer individual learners or want to reuse existing experience with common learners.

By everyday experience, when good and bad things are mixed together, the result is usually better than the worst but worse than the best. Ensemble learning combines multiple learners, so how can it perform better than the best single learner?

Classifier ensemble example

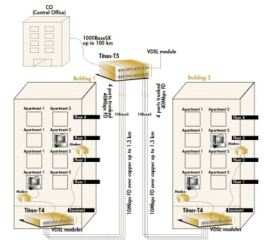

Consider a simple example: in a binary classification task, suppose three classifiers perform on three test samples as shown in Figure 2, where √ means the sample is classified correctly and × means it is misclassified, and the ensemble's result is produced by voting, i.e., "the minority obeys the majority". In Figure 2(a), each classifier has only 66.6% accuracy, but the ensemble reaches 100%. In Figure 2(b), the three classifiers make identical predictions, so the ensemble brings no improvement. In Figure 2(c), each classifier has only 33.3% accuracy, and the ensemble does even worse. This example shows that to obtain a good ensemble, the individual learners should be "good and different": each must have a certain level of "accuracy", meaning a learner cannot be too poor, and the learners must have "diversity", meaning they must differ from one another.
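The voting behaviour in Figure 2 can be checked with a few lines of Python. The exact √/× patterns below are an assumption, since the figure itself is not reproduced here; they are simply one choice consistent with the accuracies quoted for panels (a), (b) and (c).

```python
def accuracy(results):
    """Fraction of samples marked correct (True)."""
    return sum(results) / len(results)


def majority_vote(table):
    """In a binary task every wrong classifier predicts the same wrong label,
    so the ensemble is correct on a sample exactly when more than half of
    the classifiers are correct on it. Rows = classifiers, columns = samples."""
    n = len(table)
    return [2 * sum(col) > n for col in zip(*table)]


T, F = True, False  # True = √ (correct), False = × (wrong)
# Hypothetical patterns matching the accuracies described for Figure 2.
scenarios = {
    "(a)": [[T, T, F], [F, T, T], [T, F, T]],  # errors on different samples
    "(b)": [[T, T, F], [T, T, F], [T, T, F]],  # identical classifiers
    "(c)": [[T, F, F], [F, T, F], [F, F, T]],  # each correct only once
}

for name, table in scenarios.items():
    individual = [round(accuracy(row), 3) for row in table]
    ensemble = round(accuracy(majority_vote(table)), 3)
    print(name, "individual:", individual, "ensemble:", ensemble)
```

Panel (a) gains from voting because the classifiers' errors fall on different samples; in (b) there is no diversity to exploit, and in (c) each sample is outvoted by the two wrong classifiers.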

Analysis

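Consider a binary classification problem $y \in \{-1, +1\}$ with true function $f$, and suppose each base classifier $h_i$ has error rate $\epsilon$, i.e.

$$P(h_i(x) \neq f(x)) = \epsilon .$$

If the ensemble combines $T$ base classifiers by simple voting,

$$H(x) = \operatorname{sign}\left(\sum_{i=1}^{T} h_i(x)\right),$$

then the ensemble errs only when at most half of the base classifiers are correct. Assuming the base classifiers' errors are independent, Hoeffding's inequality gives, for $\epsilon < 0.5$, the ensemble error rate

$$P(H(x) \neq f(x)) = \sum_{k=0}^{\lfloor T/2 \rfloor} \binom{T}{k} (1-\epsilon)^k \epsilon^{T-k} \le \exp\left(-\frac{1}{2} T (1 - 2\epsilon)^2\right).$$

That is, as the number of individual classifiers $T$ grows, the ensemble error rate decreases exponentially and eventually approaches zero.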
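To see the effect numerically, the sketch below computes the exact majority-vote error for $T$ independent classifiers with a common error rate $\epsilon$ (the ensemble errs when at most $\lfloor T/2 \rfloor$ of them are correct) alongside the Hoeffding bound $\exp(-T(1-2\epsilon)^2/2)$. The value $\epsilon = 0.3$ is an arbitrary choice for illustration, and independence is exactly the assumption questioned in the following paragraph.

```python
import math


def ensemble_error(T, eps):
    """Exact majority-vote error for T independent binary classifiers,
    each with error rate eps: sum over k = 0..floor(T/2) correct classifiers."""
    return sum(math.comb(T, k) * (1 - eps) ** k * eps ** (T - k)
               for k in range(T // 2 + 1))


def hoeffding_bound(T, eps):
    """Upper bound exp(-T * (1 - 2*eps)**2 / 2) on the error, valid for eps < 0.5."""
    return math.exp(-0.5 * T * (1 - 2 * eps) ** 2)


# Odd T avoids tied votes in the binary case.
for T in (1, 5, 11, 21, 51):
    print(T, round(ensemble_error(T, 0.3), 6), round(hoeffding_bound(T, 0.3), 6))
```

The exact error shrinks much faster than the bound suggests; the bound's value is that it makes the exponential decay in $T$ explicit.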

However, note a key assumption in the analysis above: the errors of the base learners are mutually independent. In real tasks, the individual learners are trained to solve the same problem, so they clearly cannot be independent. In fact, the "accuracy" and "diversity" of individual learners are inherently in conflict: once accuracy is already high, increasing diversity generally requires sacrificing accuracy.

How to generate and combine "good and different" individual learners is the core of ensemble learning research.

Combination strategies

Averaging

For regression problems with numerical outputs, the commonly used combination strategy is averaging: the outputs of several weak learners are averaged to give the final prediction.

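The simplest form is the arithmetic average. Suppose we have $T$ weak learners $\{h_1, h_2, \dots, h_T\}$; the final prediction is then

$$H(x) = \frac{1}{T} \sum_{i=1}^{T} h_i(x).$$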

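If each of the $T$ individual learners $h_i$ has a weight $w_i$, the final prediction is the weighted average

$$H(x) = \sum_{i=1}^{T} w_i h_i(x),$$

where $w_i \ge 0$ and $\sum_{i=1}^{T} w_i = 1$.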
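A minimal sketch of both averaging rules for a single input $x$, assuming the $T$ learners' outputs have already been collected into a list; the function names and the example numbers are hypothetical.

```python
def simple_average(predictions):
    """Arithmetic average (1/T) * sum_i h_i(x) of the T learners' outputs."""
    return sum(predictions) / len(predictions)


def weighted_average(predictions, weights):
    """Weighted average sum_i w_i * h_i(x), requiring w_i >= 0 and sum_i w_i = 1."""
    if len(weights) != len(predictions):
        raise ValueError("need exactly one weight per learner")
    if any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must be non-negative and sum to 1")
    return sum(w * p for w, p in zip(weights, predictions))


# Outputs h_1(x), h_2(x), h_3(x) of three hypothetical regressors for one input x.
preds = [2.0, 3.0, 7.0]
print(simple_average(preds))                       # 4.0
print(weighted_average(preds, [0.5, 0.25, 0.25]))  # 3.5
```

Validating the weights up front keeps the weighted average a convex combination, so the result always lies between the smallest and largest individual prediction.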

Voting

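For classification problems, we usually use voting. Suppose the set of possible categories is $\{c_1, c_2, \dots, c_K\}$, and for any sample $x$ the predictions of the $T$ weak learners are $(h_1(x), h_2(x), \dots, h_T(x))$.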

The simplest scheme is plurality (relative majority) voting, which is the familiar "the minority obeys the majority": among the $T$ weak learners' predictions for sample $x$, the category $c_i$ that receives the most votes is the final category.

A slightly more complex scheme is absolute majority voting, the familiar "more than half" rule. Building on plurality voting, it requires not only that a category receive the most votes but also that its votes exceed half of the total; otherwise the ensemble refuses to predict.

More complex still is weighted voting: as in the weighted average, each weak learner's vote is multiplied by a weight, the weighted votes for each category are summed, and the category with the largest total is the final category.
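The three voting rules can be sketched as follows for the predictions $(h_1(x), \dots, h_T(x))$ of a single sample. The function names are hypothetical, and breaking plurality ties at random is an assumed convention (the text does not specify a tie rule); `weighted_vote` simply returns the first label among equal totals.

```python
import random
from collections import Counter, defaultdict


def plurality_vote(labels, rng=random):
    """Relative majority: the label with the most votes wins.
    Ties are broken at random (an assumed convention)."""
    counts = Counter(labels)
    top = max(counts.values())
    return rng.choice([c for c, n in counts.items() if n == top])


def absolute_majority_vote(labels):
    """Absolute majority: the top label must receive more than half of all
    votes; otherwise return None, i.e. the ensemble refuses to predict."""
    label, n = Counter(labels).most_common(1)[0]
    return label if 2 * n > len(labels) else None


def weighted_vote(labels, weights):
    """Weighted voting: add each learner's weight to the total of the label it
    predicts, then return the label with the largest total (first one on ties)."""
    totals = defaultdict(float)
    for label, w in zip(labels, weights):
        totals[label] += w
    return max(totals, key=totals.get)


# Predictions of T = 5 hypothetical weak learners for one sample x.
votes = ["c1", "c2", "c1", "c3", "c2"]
print(plurality_vote(votes))          # c1 or c2 (two votes each, random tie-break)
print(absolute_majority_vote(votes))  # None: 2 of 5 votes is not more than half
print(weighted_vote(votes, [0.1, 0.3, 0.1, 0.2, 0.3]))  # c2 (total 0.6)
```

Note how the same votes give three different outcomes: a random pick between `c1` and `c2`, a rejection, and `c2` once the learners' weights are taken into account.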

Learning-based combination

Averaging or voting over weak learners is relatively simple, but it may leave a large learning error, which motivates the learning-based approach. Its representative method is Stacking. With the Stacking strategy, we do not apply simple logic to the weak learners' outputs; instead, we add another layer of learning: the weak learners' predictions on the training set are used as inputs, the training set's true outputs are used as targets, and a new learner is trained on them to produce the final result.

In this setting, the weak learners are called primary learners, and the learner used for combining them is called the secondary learner (or meta-learner). For the test set, we first use the primary learners to make predictions, which form the input samples for the secondary learner, and then use the secondary learner to produce the final predictions.
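A minimal pure-Python sketch of the Stacking procedure described above, under several assumptions: the primary learners are hypothetical decision stumps on a 2-D toy dataset invented for illustration, and the secondary learner is a hypothetical lookup table mapping each pattern of primary predictions to its most frequent true label. Following the description in the text, the secondary learner is trained on the primary learners' in-sample predictions; in practice, cross-validated (out-of-fold) predictions are commonly used instead to reduce overfitting.

```python
from collections import Counter, defaultdict
from functools import partial


def fit_stump(X, y, feature):
    """Hypothetical primary learner: a decision stump predicting 1 when
    x[feature] > t, with t chosen among observed values to minimise
    training errors (the smallest such t wins ties)."""
    def errors(t):
        return sum(int(x[feature] > t) != label for x, label in zip(X, y))
    t = min(sorted({x[feature] for x in X}), key=errors)
    return lambda x: int(x[feature] > t)


class PatternTableLearner:
    """Hypothetical secondary learner: for each tuple of primary predictions,
    predict the most frequent true label seen with that tuple in training."""

    def fit(self, Z, y):
        counts = defaultdict(Counter)
        for z, label in zip(Z, y):
            counts[tuple(z)][label] += 1
        self.table = {z: c.most_common(1)[0][0] for z, c in counts.items()}
        # Fallback for prediction patterns never seen during training.
        self.default = Counter(y).most_common(1)[0][0]
        return self

    def predict(self, z):
        return self.table.get(tuple(z), self.default)


def fit_stacking(X, y, primary_trainers, secondary):
    # Train each primary learner on the original training set.
    primary = [train(X, y) for train in primary_trainers]
    # Primary predictions on the training set become the secondary learner's
    # inputs; the original labels are its targets.
    Z = [[h(x) for h in primary] for x in X]
    secondary.fit(Z, y)
    # At prediction time: run the primary learners, then the secondary learner.
    return lambda x: secondary.predict([h(x) for h in primary])


# Toy data (made up): the label is 1 only when both coordinates exceed 0.5.
X = [(0.2, 0.3), (0.8, 0.2), (0.3, 0.9), (0.7, 0.8),
     (0.9, 0.6), (0.1, 0.7), (0.6, 0.1), (0.85, 0.95)]
y = [0, 0, 0, 1, 1, 0, 0, 1]

trainers = [partial(fit_stump, feature=0), partial(fit_stump, feature=1)]
model = fit_stacking(X, y, trainers, PatternTableLearner())
print([model(x) for x in [(0.75, 0.9), (0.2, 0.95), (0.95, 0.1)]])
```

Each stump alone looks at only one coordinate and misclassifies some training points, while the secondary learner learns how to combine their outputs (here, effectively a logical AND) and fits the toy training set exactly.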

Typical ensemble learning methods

Depending on how the individual learners are generated, ensemble learning methods fall roughly into two categories: sequential methods, in which there are strong dependencies between individual learners so they must be generated one after another, and parallel methods, in which there are no strong dependencies so the individual learners can be generated simultaneously. The former is represented by Boosting, the latter by Bagging and Random Forest.